When you install a fresh copy of Firefox and run it for the first time, it opens a few tabs. For example, since August 2017, new installs of Firefox open the Firefox Privacy Notice in one tab on first run. The actual content can vary between versions and across platforms.

Last year we noticed some unusual traffic patterns on these pages: Lots of visitors came from countries like Finland that generally make up a tiny fraction of our traffic; many were running Linux; and many came from ISPs named after the world’s largest data center operators.

If you’ve done much web engineering, you’re probably running some variation of this heuristic in your mind:

- Do I know anyone who uses a remote Linux box in a data center for normal web browsing? (Maybe.)

- Do I know enough people who do that to make any kind of blip in the large volume of traffic that visits www.mozilla.org? (Nope.)

- What else might explain lots of Linux boxes installing Firefox and running it? (Testing automation, perhaps?)

- What testing automation tools drive browsers? (Selenium WebDriver!)

At this point we’re back to the title of this piece: Can you detect WebDriver sessions from inside a web page? Because that sure would be interesting, right? If your website gets a lot of traffic from testing bots and you could detect it, you would understand your site’s traffic better, for example. Or maybe you could do something more interesting with bot traffic than just serve it whatever content it requested. Etc.

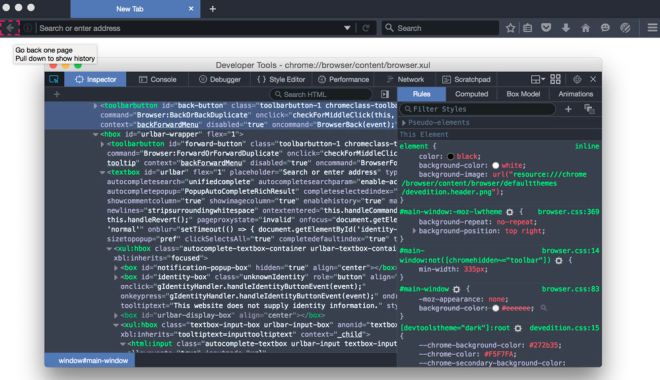

Lucky for us, we’re not the first to ask this question. Lots of people have asked it before. The best answers I found in my own searches are over on Stack Overflow in response to “Can a website detect when you are using selenium with chromedriver?” and “Selenium WebDriver is detectable”. Answers to those questions suggest an entire suite of client-side tests we could run to discover whether WebDriver is driving a browser. For example, one respondent says that the document’s html element will include an attribute added by WebDriver (or by geckodriver, the Firefox proxy for WebDriver). Another suggests checking for the presence of navigator.webdriver. And so on.
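For readers who want to try the two checks mentioned above, here is a minimal TypeScript sketch. It assumes a browser that may or may not implement navigator.webdriver, and it takes the attribute name "webdriver" on the html element from the forum answer rather than from any specification, so treat that name as hypothetical. The PageEnv interface and the firedChecks function are invented for illustration; passing the page environment in explicitly keeps the checks testable outside a browser.

```typescript
// Minimal shapes for the parts of the page we inspect. Declared locally
// (an assumption of this sketch) so it compiles without DOM typings; in a
// browser you would pass the real navigator and document.documentElement.
interface NavigatorLike {
  webdriver?: boolean;
}

interface ElementLike {
  hasAttribute(name: string): boolean;
}

interface PageEnv {
  navigator: NavigatorLike;
  documentElement: ElementLike;
}

// Each heuristic from the forum answers becomes a named predicate.
const checks: Array<{ name: string; test: (env: PageEnv) => boolean }> = [
  {
    // Browsers that implement navigator.webdriver report true while
    // automation is in control; older browsers leave it undefined.
    name: "navigator.webdriver",
    test: (env) => env.navigator.webdriver === true,
  },
  {
    // Hypothetical attribute name: "webdriver" on the <html> element,
    // as described in one answer. No specification requires it.
    name: "html[webdriver]",
    test: (env) => env.documentElement.hasAttribute("webdriver"),
  },
];

// Returns the names of the checks that fired for this environment.
function firedChecks(env: PageEnv): string[] {
  return checks.filter((c) => c.test(env)).map((c) => c.name);
}
```

In a page you might call it as firedChecks({ navigator, documentElement: document.documentElement }). An empty result does not prove that a human is at the controls; it only means these particular heuristics saw nothing.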

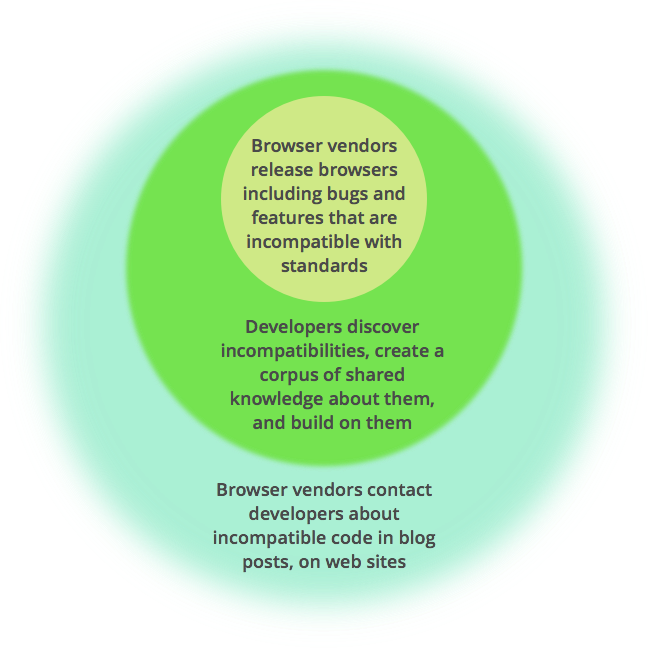

Other responses to the question say it’s impossible. Their line of reasoning goes like this: WebDriver is running a browser for the purposes of simulating real browsing activity, and a good simulation should be indistinguishable from the authentic thing, so of course WebDriver should be undetectable.

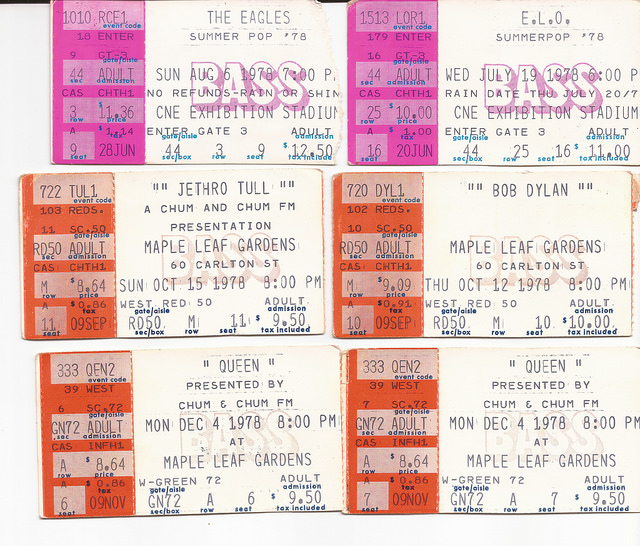

One interesting feature of these conversations is that they appear to include lots of people who want to be sure their bot activity won’t be detected by whatever website they point it at. For example, one of the most detailed responses explains how the respondent circumvented detection on a site that sells concert tickets. “It worked,” they say. It would appear that my own use case is rather tame, in comparison.

Well, there was enough controversy around the topic to inspire a bit of coding on my part. I built a trivial little tool that runs all the tests I could find; if any one of them can tell that WebDriver is at the controls, my WebDriver test passes.
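The "if any test fires, detection succeeds" rule can be sketched as a small runner. The Check type and runAllChecks are hypothetical names, not the actual tool's code, and counting a check that throws as a non-detection is a design choice of this sketch rather than something the tool is known to do.

```typescript
// A named detection heuristic. Which checks you pass in is up to you;
// the original tool's test list is not reproduced here.
type Check = { name: string; run: () => boolean };

interface DetectionReport {
  detected: boolean; // true if at least one check fired
  fired: string[]; // names of checks that returned true
  errored: string[]; // names of checks that threw (treated as "not detected")
}

// Run every check, even after one fires, so the report lists them all.
function runAllChecks(checks: Check[]): DetectionReport {
  const fired: string[] = [];
  const errored: string[] = [];
  for (const check of checks) {
    try {
      if (check.run()) fired.push(check.name);
    } catch {
      // A check that blows up (e.g. a missing API) gives no signal.
      errored.push(check.name);
    }
  }
  return { detected: fired.length > 0, fired, errored };
}
```

One possible setup: the page writes the report into the DOM, and the WebDriver-side test reads it back and asserts that detected is true.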

Digression: While the code itself is trivial, getting it to that state definitely was not. I code so rarely these days that I have to reestablish my development environment each time. That means I end up with the latest versions of, e.g., Node.js, the Node bindings for WebDriver, and the Jasmine testing framework. And that means I run into all manner of incompatibilities, both among libraries and between documented or example usage and actual usage. It feels like exploring a new continent full of undiscovered affordances.

Nevertheless, I eventually banged out some code that appears to run. And then I ran it.

So, can you detect WebDriver sessions from inside a web page?

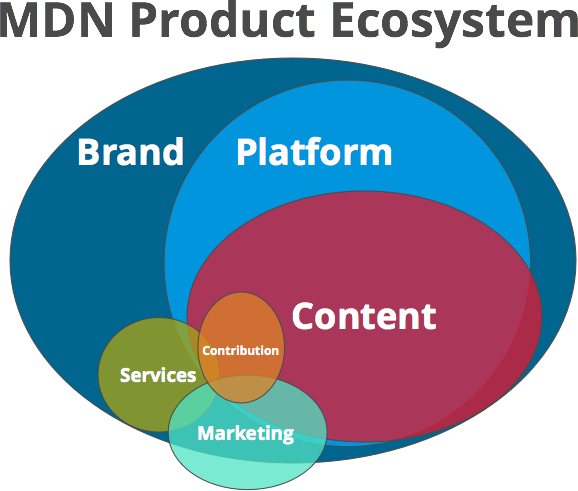

Before I get to that I want to point out that I have the great luck to work at an amazing company. I get to rub shoulders (or whatever the IRC equivalent of that is) with people who help shape the future of our most critical technologies (by way of specifications and implementations) and help determine technology’s role in society (by way of advocacy, education and straight-up lawsuits against the federal government, when necessary), and that makes me proud.

Some of the people I work with contribute a lot to automated testing tools like WebDriver. They are very patient when someone like me bungles into their IRC channel to ask this sort of thing. So when my tests completely failed to ascertain whether a bot was at the wheel of my browser, I reached out to those folks and asked: Should I be able to detect WebDriver sessions?

And they said, “Probably not.” Which was my experience, too.

A patch is in the works to expose a property on navigator such that navigator.webdriver is true when geckodriver is driving, as long as the person who launched geckodriver has not changed any code to obscure their ticket-scalping side business.
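Browsers that predate the property leave navigator.webdriver undefined, so page script that relies on it arguably needs to distinguish three cases rather than two. A minimal sketch, where classifyWebDriverFlag and the status labels are hypothetical names:

```typescript
// Possible readings of navigator.webdriver from page script.
type WebDriverStatus = "automated" | "not-flagged" | "unsupported";

// `value` is whatever navigator.webdriver evaluates to. Browsers that
// implement the property return a boolean; older ones leave it undefined.
function classifyWebDriverFlag(value: unknown): WebDriverStatus {
  if (value === true) return "automated";
  // false only means the flag is unset; a modified driver can hide it.
  if (value === false) return "not-flagged";
  // undefined (or anything unexpected): no signal at all. A naive
  // `value !== false` test would wrongly treat this case as automated.
  return "unsupported";
}
```

In a page you would call classifyWebDriverFlag(navigator.webdriver). Only "automated" carries real information; the other two outcomes are compatible with a bot that has been tweaked to stay quiet.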

Also, several companies market tools designed to “fingerprint” a browser, because doing so creates all sorts of opportunity to trade the identity of the browser (and the person running it) for profit. (It’s like silver mining, except the miners ride Uber instead of mules and the silver is extracted from your soul!) Of course such technology might also be used to thwart networks of bots, since bots can theoretically be identified in the same manner.

Meanwhile, if you just want to add some simple JavaScript to your page so you can innocently ask a browser whether its driver is a robot, you’re out of luck. For now.